Offline AI Vision with Ollama

February 15, 2025

Tags:

- chatbots

- multimodal

- llama-3

- ai

- ollama

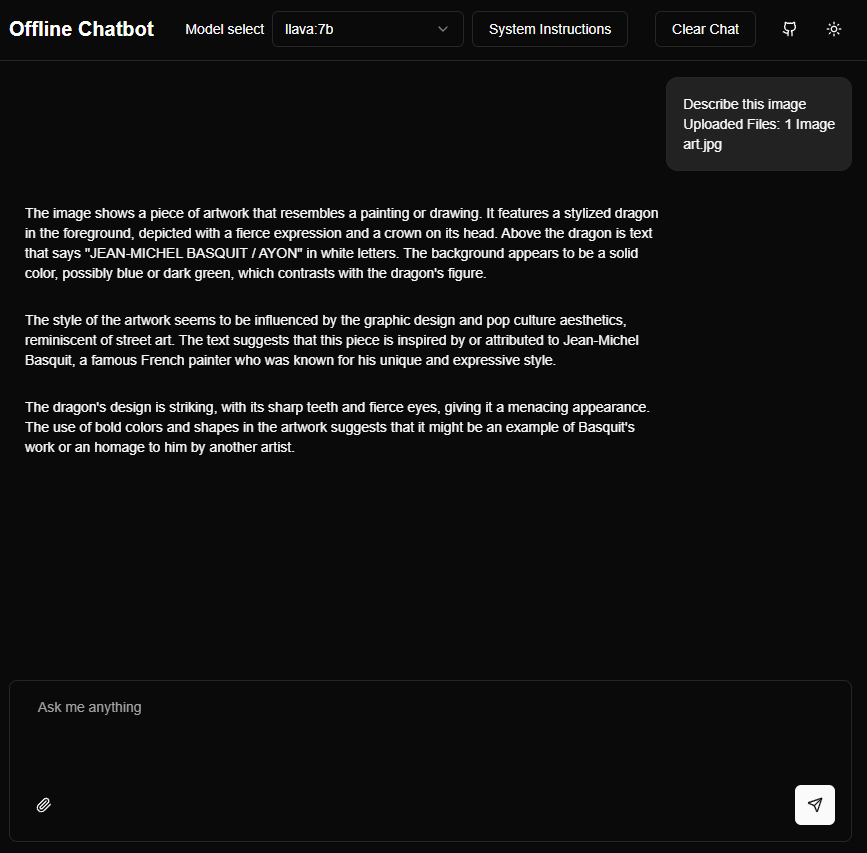

Building on my previous guide about Ollama chatbots, let’s explore how to use AI vision capabilities offline. With models like LLaVA (Large Language and Vision Assistant), you can analyze images locally without relying on cloud services or paying for API calls.

Setting Up AI Vision Models

If you’ve already installed Ollama, you’re just one command away from using AI vision. If not, check out my last post for a quick Ollama setup. Here’s how to get started:

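Download and start a LLaVA model (the first run pulls the model; `ollama pull llava:7b` downloads it without opening a chat session):

```shell
ollama run llava:7b
```

Analyze Images: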

```shell
# From the directory containing your image, run:
ollama run llava "describe this image: ./art.jpg"

# Or, inside an already running `ollama run llava` session, type the prompt directly:
describe this image: ./art.jpg
```

Available Vision Models

Several powerful vision models are available through Ollama:

- LLaVA: a general-purpose vision model, available in 7B, 13B, and 34B sizes

- Llama 3.2 Vision: Meta's multimodal Llama 3.2 models (11B and 90B), published as `llama3.2-vision`

- Browse more options in the Ollama Vision Model Library

- Original blog post by Ollama on the topic
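To check which of these models you have already downloaded, run `ollama list`, or query the local server directly. Below is a minimal stdlib sketch. It assumes Ollama is listening on its default address, `http://localhost:11434`. The `/api/tags` endpoint returns the installed models, and the hypothetical helpers `fetch_tags`, `installed_models`, and `has_model` fetch and filter that list.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if the server runs elsewhere.
OLLAMA_URL = "http://localhost:11434"


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """GET /api/tags from a running Ollama server and return the decoded JSON."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


def installed_models(tags_response: dict) -> list:
    """Pull the model names (for example 'llava:7b') out of an /api/tags response."""
    return [model["name"] for model in tags_response.get("models", [])]


def has_model(tags_response: dict, name: str) -> bool:
    """True if `name` is installed; a bare name like 'llava' is checked as 'llava:latest'."""
    wanted = name if ":" in name else f"{name}:latest"
    return wanted in installed_models(tags_response)


# Usage (requires a running server):
# print(has_model(fetch_tags(), "llava:7b"))
```

Keeping the parsing separate from the HTTP call means the filtering logic can be reused on any saved `/api/tags` response.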

Integrating Vision Into Your Apps

Ollama provides official Python and JavaScript libraries for adding vision capabilities to your applications.
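Both libraries are clients for Ollama's local REST API, which you can also call directly from any HTTP client. The following is a stdlib-only sketch that assumes the default server address. A `POST` to `/api/generate` carries the image as a base64 string in the `images` field. Setting `"stream": false` requests a single JSON reply, with the generated text in its `response` field. The helper names `build_generate_payload` and `describe_image` are hypothetical.

```python
import base64
import json
import urllib.request
from pathlib import Path


def build_generate_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build an /api/generate request body containing one base64-encoded image."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,  # one JSON object instead of a stream of chunks
    }


def describe_image(path: str, model: str = "llava",
                   base_url: str = "http://localhost:11434") -> str:
    """Send an image file to a local Ollama server and return the model's reply."""
    payload = build_generate_payload(model, "Describe this image.", Path(path).read_bytes())
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)["response"]


# Usage (requires a running server and a pulled model):
# print(describe_image("./art.jpg"))
```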

Here’s a quick Python example:

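```python
import ollama  # official client: pip install ollama

# Send a prompt plus a local image to a LLaVA model served by Ollama
res = ollama.chat(
    model="llava",
    messages=[
        {
            "role": "user",
            "content": "Describe this image:",
            "images": ["./art.jpg"],  # path to a local image file
        }
    ],
)

print(res["message"]["content"])
```

Building an Offline Vision Chatbot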

I’ve updated my previous offline chatbot implementation to include vision capabilities using Ollama’s JavaScript library. The main additions include:

- Logic for converting uploaded images to base64 format

- Support for analyzing multiple image formats (currently .png and .jpg)

- Integration with vision models such as LLaVA and Llama 3.2 Vision
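The image-handling step from the list above can also be sketched in Python. This is not the project's code, which is written in JavaScript; it only illustrates the same idea of accepting .png and .jpg uploads and encoding them as base64. Two details are added assumptions. First, `.jpeg` is treated like `.jpg`. Second, the file's leading bytes are checked against the PNG signature (`\x89PNG\r\n\x1a\n`) and the JPEG start-of-image marker (`\xff\xd8\xff`), so that a renamed file is rejected. `image_to_base64` is a hypothetical name.

```python
import base64
from pathlib import Path

# Supported extensions and the leading bytes each file type should start with.
# ".jpeg" is an added assumption beyond the article's ".png and .jpg".
SIGNATURES = {
    ".png": b"\x89PNG\r\n\x1a\n",
    ".jpg": b"\xff\xd8\xff",
    ".jpeg": b"\xff\xd8\xff",
}


def image_to_base64(filename: str, data: bytes) -> str:
    """Validate an uploaded image by extension and header, then base64-encode it."""
    ext = Path(filename).suffix.lower()
    if ext not in SIGNATURES:
        raise ValueError(f"unsupported image type: {ext or '(none)'}")
    if not data.startswith(SIGNATURES[ext]):
        raise ValueError(f"{filename} does not start with a valid {ext} header")
    return base64.b64encode(data).decode("ascii")
```

The returned string can go directly into the `images` list of an Ollama chat or generate request.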

You can check out the complete implementation in my updated project repository.
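A chatbot also has to remember earlier turns so that follow-up questions about an image make sense. Ollama's `/api/chat` endpoint is stateless: the client resends the whole message history with every request. The stdlib sketch below keeps that history as a list. It assumes the default server address and base64-encoded images. `add_turn` and `chat` are hypothetical helper names, not code from the project repository.

```python
import json
import urllib.request
from typing import List, Optional

# Assumption: default local Ollama address.
CHAT_URL = "http://localhost:11434/api/chat"


def add_turn(history: List[dict], role: str, content: str,
             images: Optional[List[str]] = None) -> List[dict]:
    """Return a new history with one message appended; images are base64 strings."""
    message = {"role": role, "content": content}
    if images:
        message["images"] = images
    return history + [message]


def chat(history: List[dict], model: str = "llava") -> str:
    """Send the full history to /api/chat and return the assistant's reply text."""
    body = json.dumps({"model": model, "messages": history, "stream": False})
    request = urllib.request.Request(
        CHAT_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)["message"]["content"]


# Usage (requires a running server; image_b64 is a base64-encoded image):
# history = add_turn([], "user", "Describe this image:", [image_b64])
# history = add_turn(history, "assistant", chat(history))
# history = add_turn(history, "user", "What colors stand out?")
# print(chat(history))
```

Returning a new list instead of mutating the old one makes it easy to keep or discard branches of a conversation.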

By using Ollama’s vision models, you can build multimodal applications that run completely offline. Because images never leave your machine, this approach suits privacy-focused AI applications and removes external API costs.

Thanks for reading! I’m a full-stack developer specializing in React, TypeScript, and Web3 technologies.

Check out more of my work at mrmendoza.dev

Find my open-source projects on GitHub

Connect with me on LinkedIn

Let me know if this article was helpful, or what you’d like to see next.